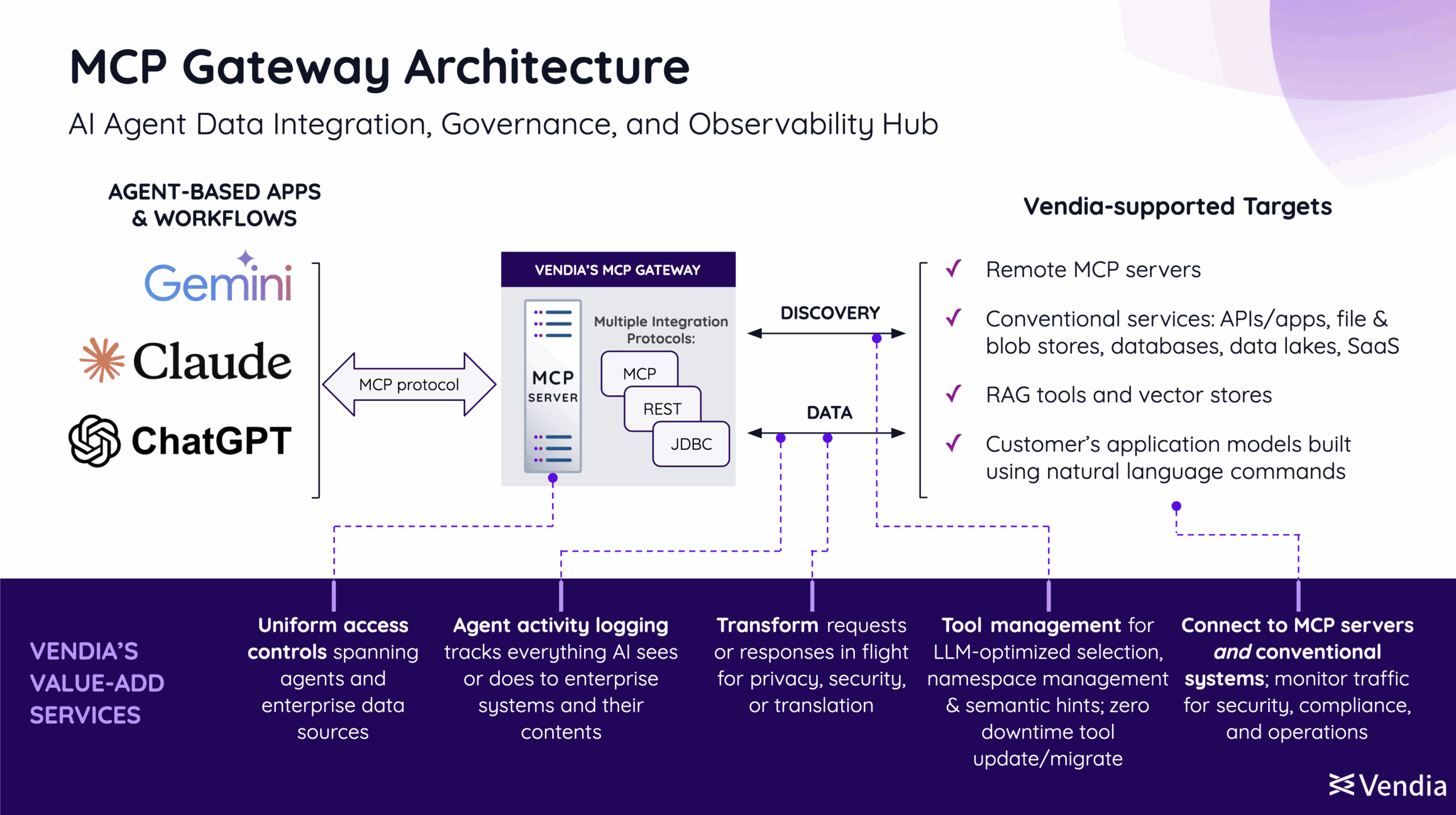

As enterprises adopt AI agents, they face a critical challenge: how do agents securely access dozens of internal APIs, databases, and SaaS tools without creating ungovernable sprawl? A familiar architectural staple holds the key: the API gateway. Enter the MCP gateway: think of an API gateway that “speaks MCP” and is purpose-built to safely connect AI agents to your enterprise systems and data.

You’re probably familiar with the idea of an API gateway: It’s a classic facade pattern that provides a layer of abstraction between an external caller and an API (often REST over HTTPS), hiding that API’s internal implementation. As we transition from conventional web and mobile apps to autonomous agents built on Large Language Models (LLMs), the concept of a gateway has also evolved. MCP gateways sit at the critical boundary between nondeterministic AI clients and mission-critical enterprise systems, and they play a vital role in adding safety, scalability, and security.

Here’s a holistic look at how the traditional strengths of an API gateway apply to the new agentic frontier and where the two diverge:

- Abstraction and aggregation design patterns: Abstraction is one of the primary benefits in both API and MCP gateways. Callers don’t have to know (and generally aren’t permitted to know) how the API is actually implemented. It could be mapped 1:1 to an internal API, implemented by multiple calls in a complex orchestration, or vary based on details such as the client type. API gateways can also aggregate multiple internal services and present them to clients as if they were a unified collection, handling complexities such as namespace management (when API names from different internal services collide or aren’t suitable for external exposure). Without this help, every AI client is “hardwired” to one or more sources, turning upgrades and replacements into breaking changes that require agent downtime.

- Governance and security: API gateways are often a security perimeter and typically provide authentication/identity and authorization services, such as per-user access controls. Without this uniformity, every MCP connection requires its own separate solution.

- Observability: For similar reasons, it’s common for API gateways to provide (or integrate with) logging and monitoring services.

- Operational services: API gateways provide rate limiting, load balancing and traffic steering, high availability (HA), autoscale signaling, and other mechanisms designed to protect potentially sensitive or vulnerable backend services from excessive or unexpected traffic, or to optimize capacity and uptime. When hooking up what are often mission-critical services and data to a nondeterministic LLM, it’s important to have the equivalent of a GFI outlet or circuit breaker in case something goes wrong.

- Data services: API gateways can check and enforce integrity constraints and transform or redact data in flight. MCP gateways can play a similar role and potentially take this even further by redacting or removing sensitive PII fields or keeping important authorization credentials out of the LLM’s memory.

- Discovery/catalog services: While API gateways are primarily designed to serve as a “data plane”, they can also act as catalogs, enabling a client to discover which services are available and how to use them (parameter and result types). A gateway might also offer metadata or value-added services, such as documentation and PII flags.
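To make the data services point concrete, here is a minimal sketch of in-flight redaction, written in plain Python with no particular gateway product or MCP SDK in mind. The `SENSITIVE_KEYS` set, the `[REDACTED]` placeholder, and the SSN-style pattern are all illustrative assumptions; a real deployment would drive these from its own policy.

```python
import re
from typing import Any

# Hypothetical policy: field names the gateway treats as sensitive.
SENSITIVE_KEYS = {"ssn", "email", "password", "api_key"}

# Illustrative pattern for US SSN-like strings embedded in free text.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

PLACEHOLDER = "[REDACTED]"


def redact(value: Any) -> Any:
    """Return a copy of a JSON-like value with sensitive data masked.

    Dict entries whose key is in SENSITIVE_KEYS are replaced wholesale;
    strings elsewhere are scanned for SSN-like patterns.
    """
    if isinstance(value, dict):
        return {
            key: PLACEHOLDER
            if isinstance(key, str) and key.lower() in SENSITIVE_KEYS
            else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    if isinstance(value, str):
        return SSN_PATTERN.sub(PLACEHOLDER, value)
    # Numbers, booleans, and None pass through unchanged.
    return value


# Example: a backend response is scrubbed before it reaches the LLM.
backend_response = {
    "name": "Ada",
    "ssn": "123-45-6789",
    "notes": ["SSN on file: 123-45-6789"],
    "account": {"api_key": "abc123", "balance": 10},
}
safe_response = redact(backend_response)
```

Because the function builds a new structure rather than mutating its input, the gateway can still log or audit the original response separately if its policy allows.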

What makes an MCP gateway different?

While an MCP gateway performs many traditional API gateway duties, it must also address the unique needs of AI agents:

- Native MCP protocol support. An MCP gateway must implement the MCP server protocol standard so that it can be connected anywhere an MCP server could go.

- Solving tool selection and namespace management problems. Catalog and discovery services are optional features in an API gateway, but they’re mandatory in MCP gateways because MCP itself includes them. MCP gateways can help solve “naming collisions”, such as two backend APIs that both have a “getStatus” call, enable administrators to selectively enable individual commands, and enhance automated LLM tool selection by providing additional semantic context on tool usage.

- Acting as a router. MCP gateways often connect to other (remote) MCP servers, acting as a router by proxying traffic between the AI client and multiple backend MCP servers.

- Acting as an API gateway and file server. Many of the services and data sources enterprise agents need access to aren’t other MCP servers: They’re conventional REST APIs, unstructured content stores like Amazon S3 or an on-prem filesystem, and so forth. MCP gateways often also include support for these existing systems.

- Acting as a data integration hub. The most sophisticated MCP gateways don’t just connect to other systems; they offer powerful data services for AI. These services help bridge data silos and fix “MCP sprawl” by giving LLMs a single, consistent view across disparate sources, providing master data management (MDM) and other translation tools, implementing custom application models, handling cross-region data resilience, and offering performance optimizations such as results caching.

- Unified governance and observability. Placed at a strategic spot in the architecture, an MCP gateway can provide essential value-add services that would otherwise be difficult to implement. It can enforce uniform access controls across many different data sources and systems and maintain a comprehensive agent activity log to track everything the AI sees or does within your enterprise systems.
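The namespace management and routing ideas above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any specific gateway is implemented: `ToolRouter`, the `__` separator, and the backend names are all hypothetical, and plain Python callables stand in for remote MCP servers.

```python
from typing import Any, Callable, Dict, List, Set

# A backend tool is modeled as a callable taking an arguments dict.
ToolFn = Callable[[Dict[str, Any]], Any]


class ToolRouter:
    """Toy gateway catalog: namespaces tools per backend and routes calls."""

    def __init__(self, separator: str = "__") -> None:
        self._separator = separator  # arbitrary choice for this sketch
        self._tools: Dict[str, ToolFn] = {}
        self._disabled: Set[str] = set()

    def register_backend(self, backend: str, tools: Dict[str, ToolFn]) -> None:
        # Prefix every tool with its backend so identical names cannot collide.
        for name, fn in tools.items():
            self._tools[f"{backend}{self._separator}{name}"] = fn

    def disable(self, qualified_name: str) -> None:
        # Administrators can hide individual tools from agents.
        self._disabled.add(qualified_name)

    def list_tools(self) -> List[str]:
        # The catalog an agent would discover: enabled tools only.
        return sorted(n for n in self._tools if n not in self._disabled)

    def call(self, qualified_name: str, arguments: Dict[str, Any]) -> Any:
        if qualified_name in self._disabled or qualified_name not in self._tools:
            raise KeyError(f"unknown or disabled tool: {qualified_name}")
        return self._tools[qualified_name](arguments)


# Two hypothetical backends that both expose a "getStatus" tool.
router = ToolRouter()
router.register_backend(
    "orders",
    {"getStatus": lambda args: "shipped", "cancel": lambda args: "cancelled"},
)
router.register_backend("billing", {"getStatus": lambda args: "paid"})
router.disable("orders__cancel")

print(router.list_tools())
print(router.call("billing__getStatus", {}))
```

Prefixing with the backend name is only one possible scheme. The point is that the gateway, rather than each agent, owns the mapping from the names agents see to the systems behind them.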

Why do you need an MCP gateway?

You might wonder if this added layer is necessary. If you’re hard-wiring a single agent to a single MCP server such as GitHub for a personal project, a gateway is probably overkill.

However, if your company plans to have multiple production agents connected to a multitude of mission-critical APIs, third-party SaaS services, and content stores across dev, test, and production environments, then hardwiring everything in a UI will become complicated, error-prone, and ultimately risky: It’s the moral equivalent of bypassing the circuit breakers in your house and crossing your fingers.

Just as API gateways became essential for web and mobile applications, MCP gateways have emerged as a necessary element in an enterprise “AI stack.” They make agents easier to build, manage, and scale while ensuring that your company retains control over the security, observability, and integrity of your data.

How do I get started?

If you’d like to see what an MCP gateway can provide, check out Vendia’s MCP Gateway sandbox, which lets you safely explore how a connected agent operates on operational data.

Or sign up for a free trial to get, at no charge, a production-ready gateway that connects to your own APIs, cloud object stores, and other MCP servers!