This article was also published on LinkedIn

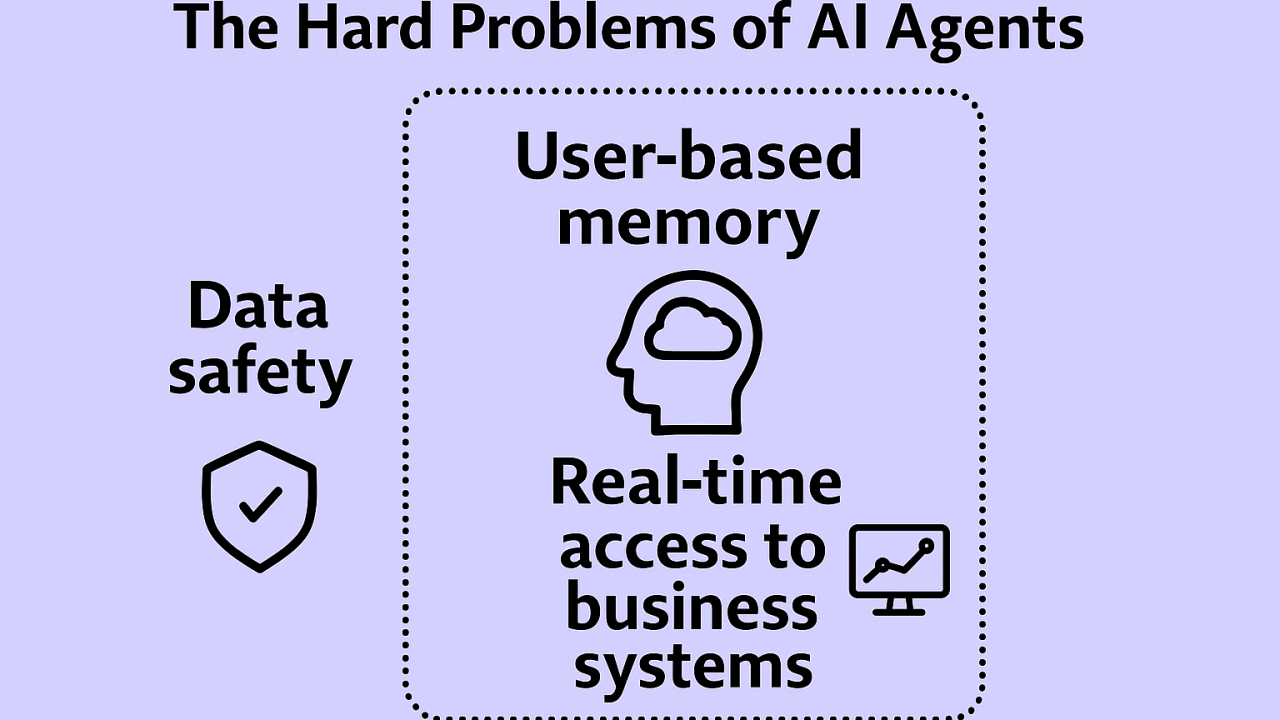

Turning state-of-the-art AI into enterprise solutions requires not just advanced LLMs capable of multi-step planning across a broad range of domains, but also more effective solutions for per-user memory, data safety, and enterprise connectivity.

LLMs, already incredibly powerful tools, are advancing rapidly: they are gaining the ability to plan multi-step operations, break larger problems down into subtasks, and address more (and more technical) domains. Together, these improvements are beginning to let them take on more complex human tasks such as project planning, solving math problems, and writing software. The companies that build LLMs are also becoming increasingly sophisticated at finding automated ways to train, test, and optimize these complex models at scale.

LLMs will continue to advance and improve, with enormous amounts of money, computing power, and both human and machine intellect being directed at them. But there are two interesting caveats to this progress. First, building them is far too complex for most organizations to do themselves. General-purpose (or “foundation”) LLMs, along with their construction, testing, vetting, compliance, and so forth, will almost certainly be the province of a very small number of companies with the technical staff and know-how to create and deliver them cost-effectively. Second, even if LLMs were perfect, they would not be a complete solution for enterprise AI on their own. Like a high-performance database, they solve an important and specific problem, but they still require additional infrastructure around them to create a complete application, such as a usable AI-based customer service agent. While a broad range of problems needs to be solved, three challenges stand out regardless of the use case or sector.

User Memory: The Hidden Bottleneck in Scaling AI Outcomes

While large language models (LLMs) excel at generating insight and holding fluid conversations, their effectiveness as persistent AI agents hinges on one critical capability outside of themselves: memory. This goes beyond ephemeral conversation context and includes structured, durable recall across both short-term sessions and long-term experiences:

Short-term memory—often referred to as the context window of an LLM—is generally limited to recent conversation history. It allows for continuity, but it’s inherently volatile and bounded. As soon as that context expires (or becomes too large), the model forgets. Without augmentation, AI agents lose track of previous threads, user intent, or system state. This is why in a longer conversation, AI can start to feel dumber instead of smarter: It’s literally losing track of everything you both said earlier in the discussion.
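To make the “forgetting” concrete, here is a minimal Python sketch of how a fixed context budget behaves. It assumes a hypothetical `build_context` helper that keeps the newest turns that fit and approximates tokens by counting words; real systems use the model’s own tokenizer and often summarize older turns rather than simply dropping them.

```python
from collections import deque

def build_context(turns, max_tokens):
    """Keep the most recent turns whose combined size fits in max_tokens.

    Tokens are approximated by whitespace-separated words (an assumption;
    a real system would use the model's tokenizer).
    """
    kept = deque()
    used = 0
    for turn in reversed(turns):      # walk from newest to oldest
        cost = len(turn.split())
        if used + cost > max_tokens:
            break                     # everything older is "forgotten"
        kept.appendleft(turn)
        used += cost
    return list(kept)

history = [
    "user: my order number is 4417",
    "agent: thanks, I see order 4417",
    "user: it shipped to the wrong address",
    "agent: I can help with that",
    "user: what was my order number again?",
]
print(build_context(history, max_tokens=20))
```

With a 20-word budget, the two turns that mention the order number fall out of the window, so the agent cannot answer the user’s last question without some form of external memory.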

Long-term memory introduces entirely new challenges to LLM-based agents. To be useful, memory must do more than store—it must be compressed efficiently, indexed intelligently, and surfaced contextually. That’s not trivial. Human memory works because relevance is emergent and contextual; AI agents must simulate that via embeddings, vector search, and adaptive retrieval. Storing terabytes of user activity is useless unless the model knows what matters and when to recall it. While LLMs have gotten very sophisticated, very quickly, long-term memory is only at the beginning of the innovation curve.
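The store, index, and retrieve loop can be illustrated in miniature. This is a deliberately simplified sketch: `embed` stands in for a real embedding model by using a bag-of-words vector, and `MemoryStore`, `remember`, and `recall` are hypothetical names. Production systems would use learned embeddings, an approximate nearest-neighbor index, and a compression step such as summarization before storing.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class MemoryStore:
    """Long-term memory: store snippets with their vectors, recall by similarity."""

    def __init__(self):
        self.items = []  # list of (text, vector) pairs

    def remember(self, text):
        self.items.append((text, embed(text)))

    def recall(self, query, k=2, min_score=0.1):
        q = embed(query)
        scored = sorted(((cosine(q, vec), text) for text, vec in self.items), reverse=True)
        # Return at most k items, and only those relevant enough to be worth surfacing.
        return [text for score, text in scored[:k] if score >= min_score]

memory = MemoryStore()
memory.remember("Customer prefers email over phone calls")
memory.remember("Customer's laptop warranty expires in March")
memory.remember("Customer asked about vegetarian catering options")
print(memory.recall("when does the laptop warranty expire?", k=1))
```

The `min_score` threshold is one crude way of deciding that nothing is relevant enough to recall, which matters as much as finding the best match.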

Furthermore, accessing both types of memory at inference time has to be fast, scalable, and relevant. Retrieval-Augmented Generation (RAG) systems are one approach, but even these struggle when user data spans multiple domains, formats, and emotional contexts. Add enterprise-grade requirements, such as permissioning, auditing, and schema evolution, and the problem compounds quickly.
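Permissioning and auditing change where filtering has to happen. In this hypothetical sketch (the `Memory` and `PermissionedRetriever` names and the group-based policy are illustrative assumptions), access checks run before any matching, so a memory the user is not entitled to never becomes a candidate for the prompt, and every retrieval leaves an audit record. Relevance is simple keyword overlap to keep the example short.

```python
from dataclasses import dataclass, field

@dataclass
class Memory:
    text: str
    allowed_groups: frozenset  # groups permitted to see this memory (hypothetical policy)

@dataclass
class PermissionedRetriever:
    memories: list
    audit_log: list = field(default_factory=list)

    def retrieve(self, user, user_groups, query):
        # 1. Enforce permissions first: hidden memories never become candidates.
        visible = [m for m in self.memories if m.allowed_groups & set(user_groups)]
        # 2. Match (here: simple keyword overlap) only among what the user may see.
        words = set(query.lower().split())
        hits = [m.text for m in visible if words & set(m.text.lower().split())]
        # 3. Record who asked for what and how much came back.
        self.audit_log.append({"user": user, "query": query, "returned": len(hits)})
        return hits

retriever = PermissionedRetriever([
    Memory("q3 salary bands for engineering", frozenset({"hr"})),
    Memory("q3 roadmap for the mobile app", frozenset({"hr", "engineering"})),
])
print(retriever.retrieve("dana", {"engineering"}, "q3 plans"))
```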

Ultimately, building memory systems that can capture the richness of user interests, habits, and histories—while remaining performant, secure, and meaningful—is one of the most complex obstacles to turning LLMs into broadly useful agents. It’s not just an engineering challenge; it’s a cognitive one.

Enterprise Connectivity

If better and more useful user memory is the critical “depth” challenge for enterprise AI, then connecting LLMs to enterprise systems and data is the ultimate “breadth” challenge.

Pick any function, system, or data set in a company today: HR, payroll, sales and marketing, engineering/manufacturing, distribution, supply chain, strategy, customer support. Every one of them can benefit from the added efficiency, scale, insight, or automation that AI can potentially deliver. The corollary is that all of these systems somehow need to be connected to a company’s data and other IT infrastructure to make that outcome possible.

Moreover, to be useful, the information in these systems must be up to date. Giving an AI agent access to a copy of last week’s or last month’s information isn’t helpful for fast-changing business data like orders, shipments, travel, account balances, and more. The AI needs to be able to see (and interoperate with) real business data in real time. This rules out approaches like retraining or RAG, where there is a lag before new data becomes available to the AI and where that data is a copy the agent cannot update.
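The difference between a copy and live access can be shown in a few lines. This is a hypothetical sketch: `OrderSystem` stands in for a real system of record, and the two `*_tool` functions stand in for tools an agent could call at request time.

```python
import copy

class OrderSystem:
    """Hypothetical system of record (stands in for a real database or service)."""

    def __init__(self):
        self._orders = {"A-100": {"status": "processing"}}

    def export(self):
        # A point-in-time copy, like data loaded into a RAG index or training set.
        return copy.deepcopy(self._orders)

    def get_status(self, order_id):
        return self._orders[order_id]["status"]

    def set_status(self, order_id, status):
        self._orders[order_id]["status"] = status

orders = OrderSystem()
snapshot = orders.export()  # copied "last night"

# Tools an agent could call at request time: they read and write live data.
def lookup_order_tool(order_id):
    return orders.get_status(order_id)

def update_order_tool(order_id, status):
    orders.set_status(order_id, status)
    return orders.get_status(order_id)

orders.set_status("A-100", "shipped")   # the business moves on
print(snapshot["A-100"]["status"])      # processing (stale copy)
print(lookup_order_tool("A-100"))       # shipped (live read)
```

The snapshot still reports the order as processing after the business has moved on, and the agent has no way to write back through it; the tool functions read and update the live system on every call.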

Protocols such as MCP (the “Model Context Protocol” introduced by Anthropic) help make enterprise connectivity possible, but platform-based solutions like Vendia’s are also needed to address the challenges of broad (and safe) data and systems integration at scale. Simply presenting an LLM with hundreds or thousands of data silos is as likely to overwhelm an artificial intelligence as it would a human. Reconciling, harmonizing, and integrating both first- and third-party data is a common prerequisite for any enterprise application, and AI-based applications will need it too if they are to make sense of the vast array of enterprise IT infrastructure to which they are being exposed.
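For a sense of what the protocol layer looks like, here is a simplified Python sketch of a server answering MCP-style `tools/list` and `tools/call` requests, which MCP carries as JSON-RPC 2.0 messages. It deliberately omits the initialization handshake, capability negotiation, and transport, and the `get_order_status` tool and its data are hypothetical; the MCP specification defines the exact message schemas.

```python
import json

# Hypothetical enterprise data behind the tool; in practice a live system of record.
ORDERS = {"A-100": "shipped", "B-200": "processing"}

# Tool metadata as advertised to the client via tools/list.
TOOLS = [{
    "name": "get_order_status",
    "description": "Look up the current status of an order by its ID.",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}]

def rpc_error(req_id, code, message):
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})

def handle(raw_request):
    """Answer one JSON-RPC 2.0 request for the tools/list and tools/call methods."""
    req = json.loads(raw_request)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "get_order_status":
            return rpc_error(req_id, -32602, "Unknown tool")
        order_id = params.get("arguments", {}).get("order_id")
        status = ORDERS.get(order_id)
        text = f"Order {order_id}: {status}" if status else f"No order {order_id}"
        # Tool-level failures are reported inside the result, not as protocol errors.
        result = {"content": [{"type": "text", "text": text}], "isError": status is None}
    else:
        return rpc_error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_order_status", "arguments": {"order_id": "A-100"}}}
print(handle(json.dumps(call)))
```

The protocol only standardizes how the agent discovers and invokes the tool. Deciding which data sits behind `get_order_status`, and reconciling it with other silos, is still the integration work described above.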

While companies have selectively exposed their systems to mobile apps, external APIs, and SaaS-based services in the past, AI apps are categorically different, both in the depth and breadth of the access they require and, of course, in the urgency with which every industry and sector is pursuing these outcomes.

Data Governance and Safety

If the problem were only connecting existing IT infrastructure (databases, applications, SaaS systems, and so on) to AI-based clients, that alone would be a massive challenge. But connecting to AI also requires care: the same attributes that make LLMs so powerful and useful also make them dangerous if they are given unchecked access to mission-critical data and systems. Vast intelligence without human oversight, the ability to generate code on the fly, unpredictable outcomes in the face of unknown user inputs: all of these mean that it’s critical to control data before an LLM sees it, not after.

Prompt-level guardrails (instructions injected into the prompt telling the model what not to reveal) and other “after the fact” (post-exposure) controls over data have not proven effective and, given the way LLMs are designed, will probably remain ineffective for the foreseeable future. That means data access controls, privacy-preserving data transformations (such as replacing a Social Security number with “X”s), data reconciliation, and other control steps must be applied before an LLM session is exposed to sensitive information. This necessitates a “firewall” of sorts around each and every AI agent, controlling both what it can see and what it can do.
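A minimal sketch of such a firewall applies both kinds of control before a record is handed to any model. It assumes a hypothetical per-role field allowlist (`FIELD_POLICY`) and a regular expression for US Social Security numbers; a real deployment would draw its policy from an identity or governance system and use far more robust sensitive-data detection than one regex.

```python
import re

# Matches strings shaped like a US SSN, e.g. 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Hypothetical policy: which fields each agent role may see at all.
FIELD_POLICY = {
    "support_agent": {"name", "order_id", "notes"},
    "billing_agent": {"name", "order_id", "balance", "notes"},
}

def redact(text):
    """Replace each digit of anything shaped like an SSN with an X."""
    return SSN_PATTERN.sub(lambda m: re.sub(r"\d", "X", m.group()), text)

def firewall(record, role):
    """Drop fields the role may not see, then redact free text, before the LLM sees it."""
    allowed = FIELD_POLICY.get(role, set())  # unknown roles see nothing
    return {k: redact(v) if isinstance(v, str) else v
            for k, v in record.items() if k in allowed}

customer = {"name": "Ana Ruiz", "order_id": "A-100", "balance": 42.5,
            "ssn": "123-45-6789", "notes": "Caller read SSN 123-45-6789 aloud"}
print(firewall(customer, "support_agent"))
```

Note that the structured `ssn` field is removed by the allowlist, while the copy buried in free-text notes is caught only by redaction; both layers are needed.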

Once data does reach an LLM, tracking what the LLM does from there (what data it reads, what files it writes, what systems, processes, or workflows it initiates or influences) becomes equally important for monitoring an AI client’s impact on both users and the enterprise itself. This is the central role of an AI ledger: an outgrowth of conventional application logs, enhanced to deal with the unique challenges of human-language inputs and outputs, dynamic access patterns, self-modifying code, and other aspects of LLM-based connectivity. And just as with human attackers, it’s critical to record both what an AI agent attempted to do and what it actually succeeded in doing, because that gap can reveal a critical lack of control or an emergent security threat.
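One common way to make such a ledger tamper-evident is to chain entries by hash, so that altering or removing a past entry breaks the chain when it is verified. The Python sketch below is a hypothetical illustration (the `AILedger` class and its fields are assumptions) that also records whether each attempted action succeeded, so denied attempts can be reviewed separately.

```python
import hashlib
import json
import time

class AILedger:
    """Append-only log of agent actions; each entry is chained to the previous by hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def _append(self, entry):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {**entry, "prev": prev}
        digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
        self.entries.append({**body, "hash": digest})

    def record(self, agent, action, target, succeeded, detail=""):
        """Log both the attempt and its outcome."""
        self._append({"ts": time.time(), "agent": agent, "action": action,
                      "target": target, "succeeded": succeeded, "detail": detail})

    def verify(self):
        """Recompute every hash; any edited or deleted entry breaks the chain."""
        prev = self.GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if body["prev"] != prev:
                return False
            if hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest() != e["hash"]:
                return False
            prev = e["hash"]
        return True

    def denied(self):
        """Attempts that did not succeed: the gap between intent and effect."""
        return [e for e in self.entries if not e["succeeded"]]

ledger = AILedger()
ledger.record("support-bot", "read", "crm/customers/881", succeeded=True)
ledger.record("support-bot", "write", "billing/refunds", succeeded=False,
              detail="blocked by policy")
print(ledger.verify(), len(ledger.denied()))   # True 1
ledger.entries[0]["target"] = "crm/customers/999"  # simulated tampering
print(ledger.verify())                         # False
```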

While many data-related issues can be solved by preventing initial access to the wrong information, an AI agent’s long-term memory poses a particularly difficult problem when the status of some piece of information changes from “OK to communicate” to “not OK.” Consider an AI chatbot that, among other things, suggests relevant products and services to customers as it interacts with them. These marketing-related conversational experiences are regulated by various laws, including the GDPR for individuals in the European Union and the CCPA/Prop 24 for California residents in the US.

Now suppose that a European citizen demands “erasure” (removal of their name from allowable marketing targets), or that a California resident discloses that they are underage and lack parental approval to receive marketing information. At this point, the AI agent’s session has been “polluted”: its short-term memory (context window and re-prompting mechanisms), workflows, long-term memory, and other mechanisms have all been shaped to market to this user, and it now needs to either forget that this ever happened or somehow be told that all of those memories, workflows, prompts, and other information have become unacceptable. This sort of “pivot and purge” isn’t something that today’s AI agents, their underlying memory banks, or their LLMs are particularly well designed for, yet these laws are unlikely to be relaxed, so some solution must be found. Until then, Gordian-knot solutions such as “factory resetting” that user’s session may be required to comply with the relevant laws.
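The bluntest version of that reset is easy to express; the hard part is everything it does not reach. This hypothetical Python sketch (the `AgentMemory` class and its fields are assumptions) clears one user’s session context and long-term memories, then adds the user to a suppression set so the agent stops accumulating new memories about them or marketing to them. It cannot touch information already folded into shared prompts, workflows, or model weights, which is exactly why the problem is hard.

```python
from dataclasses import dataclass, field

@dataclass
class AgentMemory:
    """Hypothetical per-user memory for a marketing-capable agent."""
    context: dict = field(default_factory=dict)    # short-term: user_id -> list of turns
    long_term: dict = field(default_factory=dict)  # long-term: user_id -> list of facts
    suppressed: set = field(default_factory=set)   # users who must not be profiled or marketed to

    def add_turn(self, user_id, text):
        self.context.setdefault(user_id, []).append(text)

    def remember(self, user_id, fact):
        # Block new long-term memories for suppressed users.
        if user_id not in self.suppressed:
            self.long_term.setdefault(user_id, []).append(fact)

    def purge_user(self, user_id):
        """'Factory reset' for one user: drop session context and long-term memories,
        and suppress the user so a marketing profile is not rebuilt."""
        self.context.pop(user_id, None)
        self.long_term.pop(user_id, None)
        self.suppressed.add(user_id)

    def may_market_to(self, user_id):
        return user_id not in self.suppressed

mem = AgentMemory()
mem.add_turn("u42", "Interested in premium travel cards")
mem.remember("u42", "Responds well to travel offers")
mem.purge_user("u42")  # e.g. an erasure request or an under-age disclosure
print(mem.context, mem.long_term, mem.may_market_to("u42"))  # {} {} False
```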

Turning AI innovation into safe, proven customer solutions requires solving for all these aspects—user privacy, data integration, information security, data sovereignty, and governance—when exposing corporate systems responsibly and safely to AI-based solutions. Keeping AI’s intelligence in check may prove harder in the end than making it feel intelligent in the first place!

Conclusion

LLM capabilities are growing quickly. But to continue making rapid progress at turning this phenomenal technology into viable business solutions, we need to solve for more than understanding and generating human language. Larger and more effective long-term memory is needed so that AI agents don’t forget what they’re supposed to be doing or drop critical insights about the customer they’re trying to assist. Broad connectivity between AI clients and enterprise data and systems, mediated through protocols such as MCP, is needed so that AI agents can work with real-world (and real-time) information and be useful to end users. And throughout all of this, careful attention to, and control over, how both corporate and personal data is used by AI-based applications is needed to ensure that compliance, security, and safety goals are met.